Table of Contents

Welcome

This book is born from a simple desire: to give back. After years of working with Zabbix, and authoring previous many other publications about the platform, Patrik and Nathan felt a strong pull to share their knowledge in a way that was accessible to everyone. That's how the initial idea of a free, online Zabbix resource was conceived – a community-driven project dedicated to empowering users.

As the online resource grew, so did the vision. We recognized the potential to create something even more impactful. This led to the formation of a foundation, dedicated to ensuring the long-term sustainability and growth of this community effort. This book, a tangible culmination of that vision, represents the next step. All profits generated from its sales will be reinvested back into the community, enabling us to further expand and enhance the resources and support we offer. This is more than just a book; it's a testament to the power of shared knowledge and a commitment to fostering a thriving Zabbix community."

License

Please note: The english version is the primary source document. Translations are provided for convenience, but this version is considered the most accurate.

Please before you start take a look at our most updated license : License on Github.

The Zabbix Book is a freely accessible resource designed to help users understand and master Zabbix. Contributions are highly encouraged to improve and expand its content. However, the book is distributed under the Creative Commons Attribution-NonCommercial-ShareAlike 4.0 (CC BY-NC-SA 4.0) license, meaning it is free for non-commercial use only.

Contributors should be aware that:

- By contributing to this work, you irrevocably assign and transfer all rights, title, and interest in your contributions to The Monitoring Penmasters Foundation, including any associated intellectual property rights, to the fullest extent permitted by law.

- The Monitoring Penmasters Foundation reserves the right to use, reproduce, modify, distribute, and commercially exploit any contributed material in any form, including but not limited to the publication of physical and digital books.

- All contributors must sign a Deed of Transfer of Intellectual Property Rights before making any contributions, ensuring the proper transfer of rights and handling of the content by The Monitoring Penmasters Foundation. Any contributions without a signed Deed of Transfer of Intellectual Property Rights cannot be accepted.

- All profits generated will be used by The Monitoring Penmasters Foundation to cover operational expenses and to sponsor other open-source projects, as determined by the foundation.

Your contributions are invaluable and will help make The Zabbix Book an even greater resource for the entire community!

Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License.

Guidelines

How to contribute

- Sign the deed of transfer preferable electronically

- Clone this project to your Github account

-

Clone the repository to you pc

-

Install the needed software for Mkdocs to work, check the file in the root folder how-to-install-mkdocs.md

- Create a new branch to make your changes

- git branch "<your branch name>"

- git checkout "<your branch name>"

- Make the changes you want and commit them

- git add "files you changed"

- git commit -m "add useful commit info"

- Return back to the main branch

- git checkout main

- Make sure you have the latest changes merged from main

- git pull origin main

- Merge your branch into the main branch

- git merge "<your branch name>"

- git push

- cleanup your branch

- git branch -d "<your branch name>"

- Create a pull requests so that we can merge it :)

- Follow these guidelines when you write a topic.

Tags

Use tags to quickly browse per level.

- beginner — Assumes no or minimal prior Zabbix knowledge. Covers basic concepts, installation, simple use cases.

- advanced — Assumes the reader is comfortable with core Zabbix features and configurations; covers more involved setups, integrations, intermediate complexity.

- expert — Covers high-end topics: scaling, HA, deep performance tuning, custom extensions, edge cases, complex architectures, security hardening.

tags.md:482-504/name

Supporters & Contributors

This book would not have been possible without the dedication, generosity, and expertise of many individuals and organizations. We extend our heartfelt thanks to everyone who has supported this project, whether through financial contributions, technical expertise, content reviews, or community engagement.

Our Sponsors

We are deeply grateful to the sponsors who have provided financial or material support to help bring this book to life. Their contributions have enabled us to maintain high-quality content, support open-source initiatives, and ensure that this book remains accessible to as many people as possible.

- OICTS : https://oicts.com/

- ZABBIX : https://www.zabbix.com/

Our Contributors

This book is a community effort, and we sincerely appreciate the time and knowledge shared by our contributors. From writing and reviewing content to providing feedback and sharing expertise, your efforts have helped shape this resource into something valuable for the monitoring and open-source communities.

- Patrik Uytterhoeven : http://github.com/Trikke76

- Nathan Liefting : https://github.com/larcorba

- Evgeny Yurchenko: https://github.com/BGmot

- Nikolas Garofil: https://github.com/ngaro

- Aigars Kadikis : https://github.com/aigarskadikis

- Giedrius Stasiulionis : https://github.com/b1nary1

- Leonardo Cardoso: https://github.com/leocardosoo

- Robin Roevens: https://github.com/RobinR1

A list of all the contributors who where so kind to fix typos etc .. can be found here : https://github.com/penmasters/zabbix-book/graphs/contributors

Translators

- Nicolas Hermel : https://github.com/wityender

- Dimitry Q : https://github.com/krotesk

- Leonardo Cardoso: https://github.com/leocardosoo

Special Thanks to Our Board Members

A special acknowledgment goes to the members of our board, whose vision, leadership, and dedication have guided this project from its inception. Their commitment to open-source principles and knowledge sharing has been instrumental in making this book a reality.

- Patrik Uytterhoeven : http://github.com/Trikke76

- Nathan Liefting : https://github.com/larcorba

- Alexei Vladishev :

Every Contribution Matters

Open-source thrives on collaboration, and even the smallest contributions help make a difference. Whether it was reporting a typo, suggesting an improvement, opening an issue, or simply sharing feedback, we appreciate everyone who took the time to help refine and improve this book. Your efforts, no matter how small, are a valuable part of this project. Check out Everyone who created an issue.

Join the Community

We welcome new contributors and supporters! If you'd like to get involved whether by contributing content, providing feedback, or supporting this initiative you can find more details on how to participate at Guidelines.

Thank you for being part of this journey and helping us build a valuable resource for the open-source community!

Getting started

Getting Started with Zabbix – Unlocking the Power of Monitoring

Welcome to the world of Zabbix, a powerful open-source monitoring solution designed to give you comprehensive insights into your IT infrastructure. Whether you're managing a small network or overseeing a large-scale enterprise system, Zabbix provides the tools you need to monitor performance, detect issues, and ensure the smooth operation of your services.

In this book, we focus on Zabbix LTS 8.0, the long-term support version that ensures stability and reliability for your monitoring needs. We'll explore its extensive feature set, including the newly introduced reporting functionality and built-in web monitoring based on the Selenium driver, which allows for sophisticated end-user experience monitoring through automated browser interactions.

Zabbix is more than just a simple monitoring tool. It offers a wide range of features that allow you to:

- Monitor diverse environments: Track the performance and availability of servers, virtual machines, network devices, databases, and applications.

- Create dynamic visualizations: Use dashboards, graphs, maps, and screens to visualize data and get an overview of your system's health at a glance.

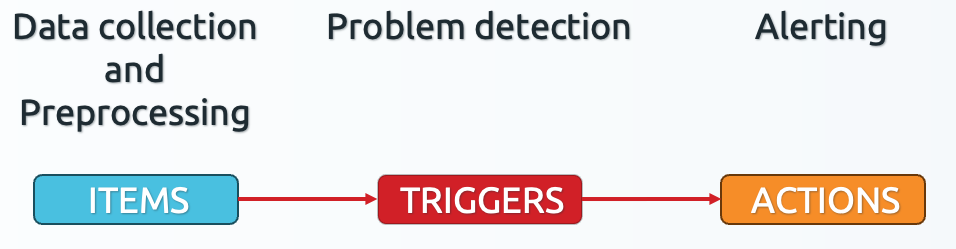

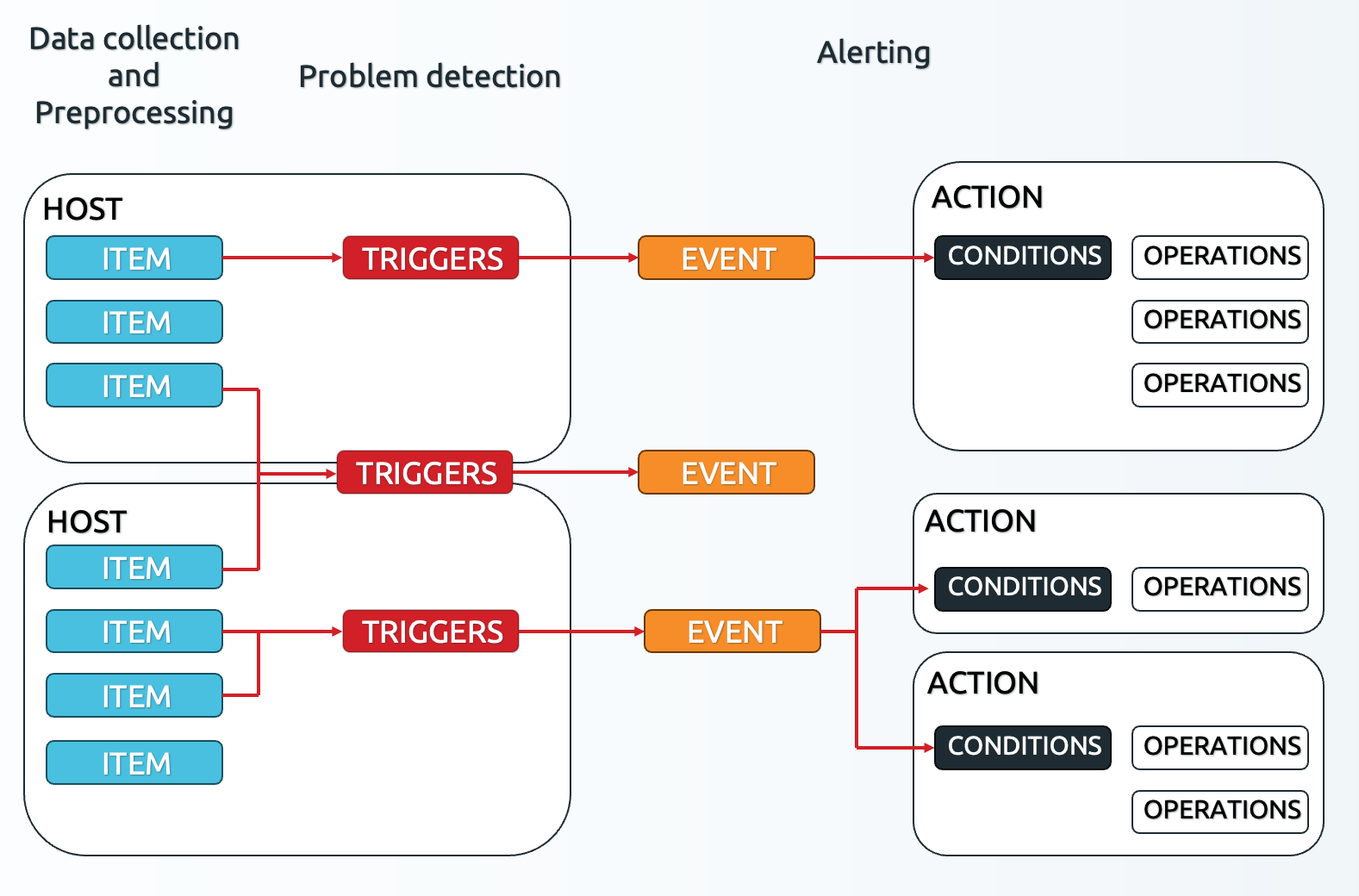

- Set up complex alerting mechanisms: Define triggers and actions that notify you of potential issues before they become critical, using various channels like email, SMS, and integrations with external services.

- Automate monitoring tasks: Leverage auto-discovery and auto-registration to keep up with changing environments without manual intervention.

- Customize and extend: Build custom scripts, templates, and integrations to tailor Zabbix to your specific needs.

System Requirements

Requirements

Zabbix has specific hardware and software requirements that must be met, and these requirements may change over time. They also depend on the size of your setup and the software stack you select. Before purchasing hardware or installing a database version, it's essential to consult the Zabbix documentation for the most up-to-date requirements for the version you plan to install. You can find the latest requirements https://www.zabbix.com/documentation/current/en/manual/installation/requirements. Make sure to select the correct Zabbix version from the list.

For smaller or test setups, Zabbix can comfortably run on a system with 2 CPUs and 8 GB of RAM. However, your setup size, the number of items you monitor, the triggers you create, and how long you plan to retain data will impact resource requirements. In today's virtualised environments, my advice is to start small and scale up as needed.

You can install all components (Zabbix server, database, web server) on a single machine or distribute them across multiple servers. For simplicity, take note of the server details:

| Component | IP Address |

|---|---|

| Zabbix Server | |

| Database Server | |

| Web Server |

Tip

Zabbix package names often use dashes (-) in their names, such as zabbix-get

or zabbix-sender, but the binaries themselves may use underscores (_),

like zabbix_sender or zabbix_server. This naming discrepancy can sometimes

be confusing, particularly if you are using packages from non-official Zabbix

repositories.

Always check if a binary uses a dash or an underscore when troubleshooting.

Warning

Starting from Zabbix 7.2, only MySQL (including its forks) and PostgreSQL are supported as back-end databases. Earlier versions of Zabbix also included support for Oracle Database; however, this support was discontinued with Zabbix 7.0 LTS, making it the last LTS version to officially support Oracle DB.

Basic OS Configuration

Operating systems, so many choices, each with its own advantages and loyal user base. While Zabbix can be installed on a wide range of platforms, documenting the process for every available OS would be impractical. To keep this book focused and efficient, we have chosen to cover only the most widely used options: Ubuntu, Red Hat and Suse based distributions.

Since not everyone has access to a Red Hat Enterprise Linux (RHEL) or a SUSE Linux Enterprise Server (SLES) subscription even though a developer account provides limited access we have opted for Rocky Linux respectively openSUSE Leap as a readily available alternative. For this book, we will be using Rocky Linux 9.x, openSUSE Leap 16 and Ubuntu LTS 24.04.x.

Note

OS installation steps are outside the scope of this book, but a default or even a minimal installation of your preferred OS should be sufficient. Please refrain from installing graphical user interfaces (GUIs) or desktop environments, as they are unnecessary for server setups and consume valuable resources.

Once you have your preferred OS installed, there are a few essential configurations to perform before proceeding with the Zabbix installation. Perform the following steps on all the servers that will host Zabbix components (i.e., Zabbix server, database server, and web server).

Update the System

Before installing the Zabbix components, or any new software, it's a best practice to ensure your operating system is up-to-date with the latest patches and security fixes. This will help maintain system stability and compatibility with the software you're about to install. Even if your OS installation is recent, it's still advisable to run an update to ensure you have the latest packages.

To update your system, run the following command based on your OS:

Update your system

Red Hat

SUSE

Ubuntu

What is apt, dnf or zypper

- DNF (Dandified YUM) is a package manager used in recent Red Hat-based systems (invoked as

dnf). - ZYpp (Zen / YaST Packages Patches Patterns Products) is the package manager

used on SUSE-based systems (invoked as

zypper) and - APT (Advanced Package Tool) is the package manager used on Debian/Ubuntu-based systems (invoked as

apt).

If you're using another distribution, replace dnf/zypper/apt with your appropriate

package manager, such as yum, pacman, emerge, apk or ... .

Do note that package names may also vary from distribution to distribution.

Tip

Regularly updating your system is crucial for security and performance. Consider setting up automatic updates or scheduling regular maintenance windows to keep your systems current.

Sudo

By default the Zabbix processes like the Zabbix server and agent run under their

own unprivileged user accounts (e.g., zabbix). However, there are scenarios where

elevated privileges are required, such as executing custom scripts or commands

that need root access.

Also throughout this book, we will perform certain administrative tasks that

require sudo on the system.

Usually, sudo is already present on most systems, but when you performed

a minimal installation of your OS, it might be missing. Therefore we need to

ensure it's installed.

This will also allow the Zabbix user to execute specific configured commands with elevated privileges without needing to switch to the root user entirely.

What is sudo

sudo (short for "superuser do") is a command-line utility that allows

permitted users to execute commands with the security privileges of another

user, typically the superuser (root). It is commonly used in Unix-like

operating systems to perform administrative tasks without needing to log in

as the root user.

To install sudo, run the following command based on your OS:

Install sudo

Red Hat

SUSE

Ubuntu

On Ubuntu, sudo is normally installed by default. Root access is managed

through sudo for the initial user created during installation.

If sudo is already installed, these commands will inform you that the latest version

is already present and no further action is needed. If not, the package manager

will proceed to install it.

Firewall

Next, we need to ensure that the firewall is installed and configured. A firewall is a crucial security component that helps protect your server from unauthorized access and potential threats by controlling incoming and outgoing network traffic based on predetermined security rules.

To install and enable the firewall, run the following command:

Install and enable the firewall

Red Hat

SUSEUbuntu

What is firewalld / ufw

Firewalld is the replacement for iptables in RHEL- and SUSE-based systems and allows changes to take effect immediately without needing to restart the service. If your distribution does not use Firewalld, refer to your OS documentation for the appropriate firewall configuration steps. Ubuntu makes use of UFW which is merely a frontend for iptables.

During the Zabbix installation in the next chapters, we will need to open specific ports in the firewall to allow communication between Zabbix components.

Alternatively to just opening ports, as we will do in the next chapters, you can also choose to define dedicated firewall zones for specific use cases. This approach enhances security by isolating services and restricting access based on trust levels. For example...

You can confirm the creation of the zone by executing the following command:

Verify the zone creation

Using zones in firewalld to configure firewall rules provides several advantages in terms of security, flexibility, and ease of management. Here’s why zones are beneficial:

- Granular Access Control :

- Firewalld zones allow different levels of trust for different network interfaces and IP ranges. You can define which systems are allowed to connect to PostgreSQL based on their trust level.

- Simplified Rule management:

- Instead of manually defining complex iptables rules, zones provide an organized way to group and manage firewall rules based on usage scenarios.

- Enhanced security:

- By restricting application access to a specific zone, you prevent unauthorized connections from other interfaces or networks.

- Dynamic configuration:

- Firewalld supports runtime and permanent rule configurations, allowing changes without disrupting existing connections.

- Multi-Interface support:

- If the server has multiple network interfaces, zones allow different security policies for each interface.

Bringing everything together to add a zone for, in this example, PostgreSQL it would look like this:

Firewalld with zone config for PostgreSQL database access

Where the source IP is the only address permitted to establish a connection to

the database.

If you wish to use zones when using firewalld, ensure to adapt the instructions in the following chapters accordingly.

Time Server

Another crucial step is configuring the time server and syncing the Zabbix server using an NTP client. Accurate time synchronization is vital for Zabbix, both for the server and the devices it monitors. If one of the hosts has an incorrect time zone, it could lead to confusion, such as investigating an issue in Zabbix that appears to have happened hours earlier than it actually did.

To install and enable chrony, our NTP client, use the following command:

Install NTP client

Red Hat

SUSE

Ubuntu

After installation, verify that Chrony is enabled and running by checking its status with the following command:

what is Chrony

Chrony is a modern replacement for ntpd, offering faster and

more accurate time synchronization. If your OS does not support

Chrony, consider using

ntpd instead.

Once Chrony is installed, the next step is to ensure the correct time zone is set.

You can view your current time configuration using the timedatectl command:

Check the time config

Ensure that the Chrony service is active (refer to the previous steps if needed). To set the correct time zone, first, you can list all available time zones with the following command:

This command will display a list of available time zones, allowing you to select the one closest to your location. For example:

List of all the timezones available

Once you've identified your time zone, configure it using the following command:

To verify that the time zone has been configured correctly, use the timedatectl

command again:

Check the time and zone

Note

Some administrators prefer installing all servers in the UTC time zone to ensure that server logs across global deployments are synchronized. Zabbix supports user-based time zone settings, which allows the server to remain in UTC while individual users can adjust the time zone via the interface if needed.

Verifying Chrony Synchronization

To ensure that Chrony is synchronizing with the correct time servers, you can run the following command:

The output should resemble:

Verify your chrony output

localhost:~ # chronyc

chrony version 4.2

Copyright (C) 1997-2003, 2007, 2009-2021 Richard P. Curnow and others

chrony comes with ABSOLUTELY NO WARRANTY. This is free software, and

you are welcome to redistribute it under certain conditions. See the

GNU General Public License version 2 for details.

chronyc>

Once inside the Chrony prompt, type the sources command to check the used time sources:

Example output:

Check your time server sources

chronyc> sources

MS Name/IP address Stratum Poll Reach LastRx Last sample

===============================================================================

^- 51-15-20-83.rev.poneytel> 2 9 377 354 +429us[ +429us] +/- 342ms

^- 5.255.99.180 2 10 377 620 +7424us[+7424us] +/- 37ms

^- hachi.paina.net 2 10 377 412 +445us[ +445us] +/- 39ms

^* leontp1.office.panq.nl 1 10 377 904 +6806ns[ +171us] +/- 2336us

In this example, the NTP servers in use are located outside your local region. It is recommended to switch to time servers in your country or, if available, to a dedicated company time server. You can find local NTP servers here: www.ntppool.org.

Updating Time Servers

To update the time servers, modify the Chrony configuration file:

Edit chrony config file

Red Hat

SUSE

On SUSE, the pool configuration is located in a separate file. You can edit that file directly or add a new configuration file in the same directory. In the latter case, ensure to disable or remove the existing pool configuration to avoid conflicts.Ubuntu

Replace the existing NTP server pool with one closer to your location.

Example of the current configuration:

Example ntp pool config

Change the pools you want to a local time server:

Change ntp pool config

After making this change, restart the Chrony service to apply the new configuration:

Verifying Updated Time Servers

Check the time sources again to ensure that the new local servers are in use:

Example of expected output with local servers:

Example output

chronyc> sources

MS Name/IP address Stratum Poll Reach LastRx Last sample

===============================================================================

^- ntp1.unix-solutions.be 2 6 17 43 -375us[ -676us] +/- 28ms

^* ntp.devrandom.be 2 6 17 43 -579us[ -880us] +/- 2877us

^+ time.cloudflare.com 3 6 17 43 +328us[ +27us] +/- 2620us

^+ time.cloudflare.com 3 6 17 43

This confirms that the system is now using local time servers.

Conclusion

As we have seen, before even considering the Zabbix packages, attention must be paid to the environment in which it will reside. A properly configured and up-to-date operating system, an open path through the firewall, and accurate timekeeping are not mere suggestions, but essential building blocks. Having laid this groundwork, we can now proceed with confidence to the Zabbix installation, knowing that the underlying system is prepared for the task.

Questions

- Why do you think accurate time synchronization is so crucial for a monitoring system like Zabbix?

- Now that the groundwork is laid, what do you anticipate will be the first step in the actual Zabbix installation process?

- As we move towards installing Zabbix, let's think about network communication. What key ports do you anticipate needing to allow through the firewall for the Zabbix server and agents to interact effectively?

Useful URLs

Preparing the system for Zabbix

Before installing any Zabbix component, we need to ensure that the server(s) meet the configuration requirements outlined in the previous section: System Requirements.

If you plan to install the Zabbix database, server and/or frontend on separate machines, prepare each server individually according to the instructions provided here. Also servers that will host a Zabbix Proxy, need to be prepared in the same way.

Disable SELinux on RHEL

Another critical step at this stage if you use Red Hat based systems is disabling SELinux, which can interfere with the installation and operation of Zabbix. We will revisit SELinux at the end of this chapter once our installation is finished.

To check the current status of SELinux, you can use the following command: `sestatus``

Selinux status

~# sestatus

SELinux status: enabled

SELinuxfs mount: /sys/fs/selinux

SELinux root directory: /etc/selinux

Loaded policy name: targeted

Current mode: enforcing

Mode from config file: enforcing

Policy MLS status: enabled

Policy deny_unknown status: allowed

Memory protection checking: actual (secure)

Max kernel policy version: 33

As shown, the system is currently in enforcing mode. To temporarily disable SELinux,

you can run the following command: setenforce 0

Disable SeLinux

~# setenforce 0

~# sestatus

SELinux status: enabled

SELinuxfs mount: /sys/fs/selinux

SELinux root directory: /etc/selinux

Loaded policy name: targeted

Current mode: permissive

Mode from config file: enforcing

Policy MLS status: enabled

Policy deny_unknown status: allowed

Memory protection checking: actual (secure)

Max kernel policy version: 33

Now, as you can see, the mode is switched to permissive. However, this change

is not persistent across reboots. To make it permanent, you need to modify the

SELinux configuration file located at /etc/selinux/config. Open the file and

replace enforcing with permissive.

Alternatively, you can achieve the same result more easily by running the following command:

Disable SeLinux permanent

Red Hat

This line will alter the configuration file for you. So when we run sestatus

again we will see that we are in permissive mode and that our configuration

file is also in permissive mode.

Verify selinux status again

~# sestatus

SELinux status: enabled

SELinuxfs mount: /sys/fs/selinux

SELinux root directory: /etc/selinux

Loaded policy name: targeted

Current mode: permissive

Mode from config file: permissive

Policy MLS status: enabled

Policy deny_unknown status: allowed

Memory protection checking: actual (secure)

Max kernel policy version: 33

Install the Zabbix repository

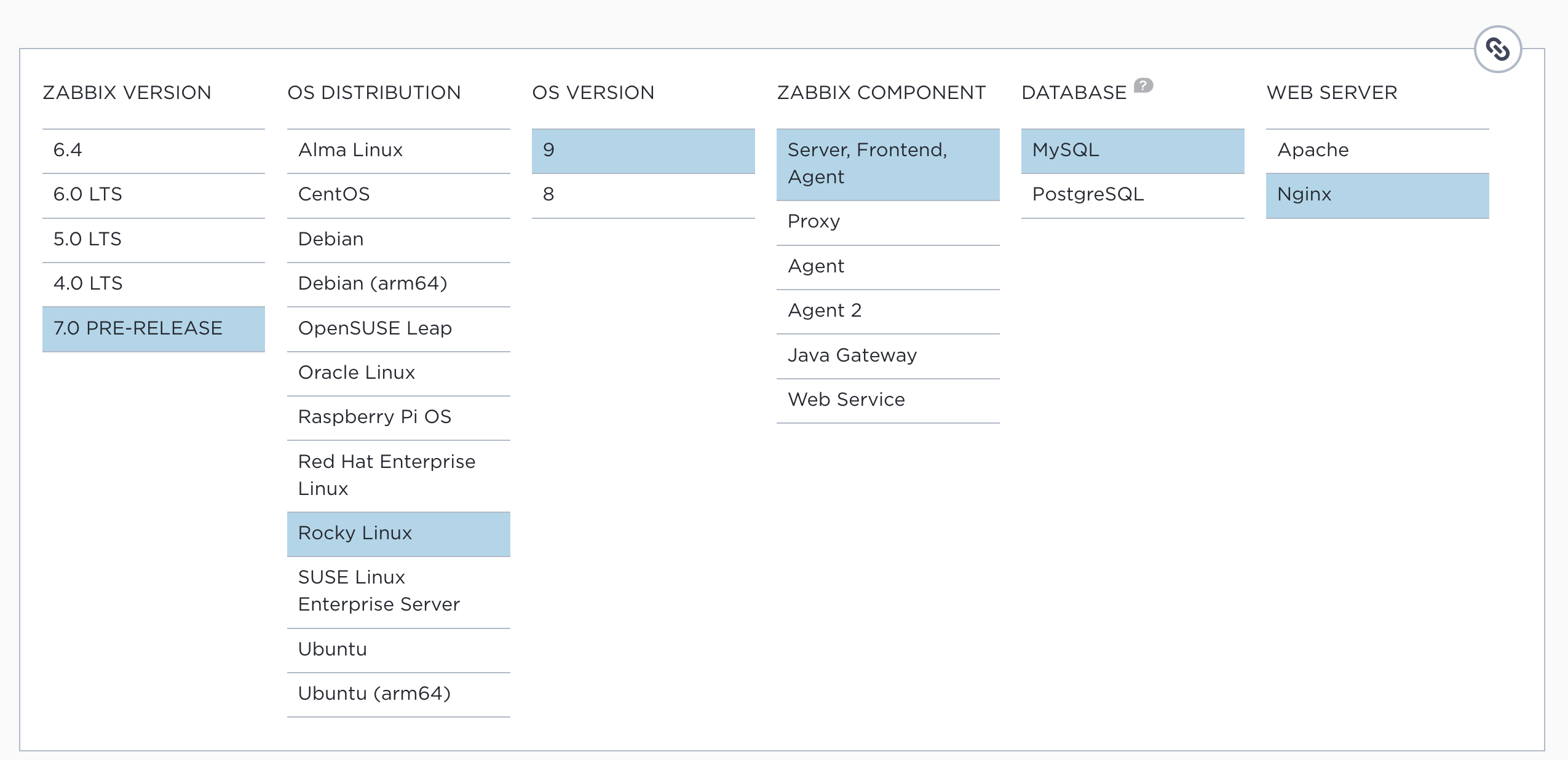

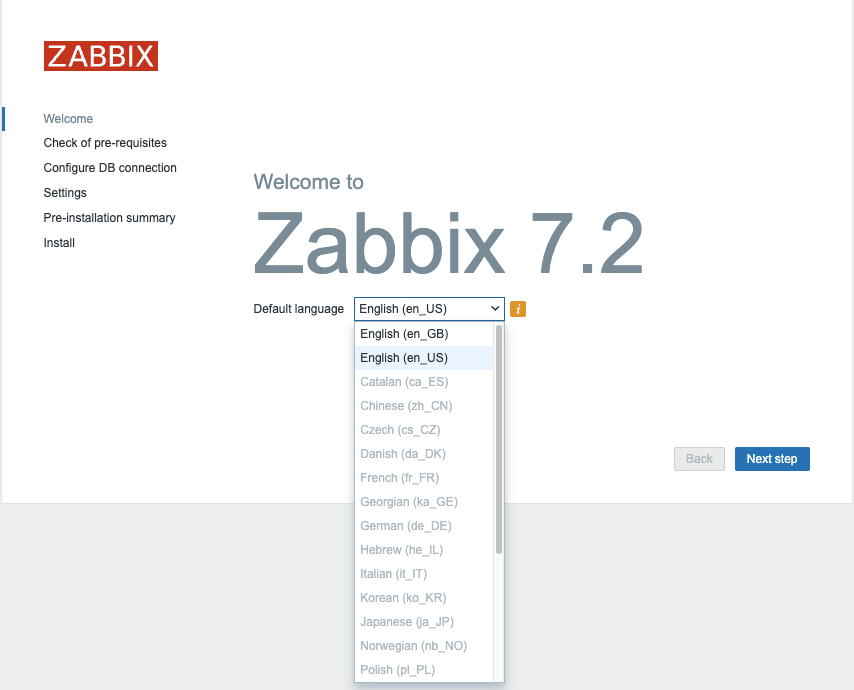

From the Zabbix Download page https://www.zabbix.com/download, select the appropriate Zabbix version you wish to install. In this case, we will be using Zabbix 8.0 LTS. Additionally, ensure you choose the correct OS distribution for your environment, which will be Rocky Linux 9, openSUSE Leap 16 or Ubuntu 24.04 in our case.

We will be installing the Zabbix Server along with NGINX as the web server for the front-end. Make sure to download the relevant packages for your chosen configuration.

1.2 Zabbix download

Red Hat specific remarks

If you make use of a RHEL based system like Rocky then the first step is to disable

the Zabbix packages provided by the EPEL repository, if it's installed on your system.

To do this, edit the /etc/yum.repos.d/epel.repo file and add the following statement

to disable the EPEL repository by default:

Tip

It's considered bad practice to keep the EPEL repository enabled all the time,

as it may cause conflicts by unintentionally overwriting or installing unwanted

packages. Instead, it's safer to enable the repository only when needed, by using

the following command during installations: dnf install --enablerepo=epel

OpenSUSE specific remarks

On openSUSE, Zabbix packages are also available in the default repo-oss repository.

Unlike RHEL-based systems, openSUSE does not provide a built-in way to exclude

specific packages from individual repositories. However, the Zabbix packages

included in the default repositories are typically one to two LTS versions behind

the latest releases. As a result, they are unlikely to interfere with your

installation unless they are already installed.

In the next step, we will configure the official Zabbix repositories. As long

as you select a Zabbix repository version newer than the packages available in

repo-oss, zypper will automatically install the most recent version.

Tip

If you have already installed Zabbix packages from the default repositories, it is recommended to either:

- Remove them before proceeding, or

- Upgrade them after adding the new Zabbix repositories, using the zypper

option

--allow-vendor-change.

Suse Linux Enterprise Server (SLES)

If you are using SLES, the Zabbix packages are not included in the default repositories. Therefore, you can proceed to add the official Zabbix repository without any concerns about conflicts with existing packages.

Adding the Zabbix repository

Next, we will install the Zabbix repository on our operating system. After adding the Zabbix repository, it is recommended to perform a repository cleanup to remove old cache files and ensure the repository metadata is up to date. You can do this by running:

Add the zabbix repo

Red Hat

rpm -Uvh https://repo.zabbix.com/zabbix/8.0/release/rocky/9/noarch/zabbix-release-latest-8.0.el9.noarch.rpm

dnf clean all

SUSE

rpm -Uvh --nosignature https://repo.zabbix.com/zabbix/8.0/release/sles/16/noarch/zabbix-release-latest-8.0.sles16.noarch.rpm

zypper --gpg-auto-import-keys refresh 'Zabbix Official Repository'

# Set the repository to auto-refresh to ensure it's always up to date

zypper modifyrepo --refresh 'Zabbix Official Repository'

Ubuntu

This will refresh the repository metadata and prepare the system for Zabbix installation.

What is a repository?

A repository in Linux is a configuration that allows you to access and install software packages. You can think of it like an "app store" where you find and download software from a trusted source, in this case, the Zabbix repository. Many repositories are available, but it's important to only add those you trust. The safest practice is to stick to the repositories provided by your operating system and only add additional ones when you're sure they are both trusted and necessary.

For our installation, the Zabbix repository is provided by the vendor itself, making it a trusted source. Another popular and safe repository for Red Hat-based systems is EPEL (Extra Packages for Enterprise Linux), which is commonly used in enterprise environments. However, always exercise caution when adding new repositories to ensure system security and stability.

Preparing the system for running containers using Podman

If you plan to run Zabbix components as containers using Podman, you need to ensure that Podman is installed and properly configured on your system. Below are the instructions for installing Podman on different operating systems.

You can skip this section if you do not plan to run any Zabbix components as containers on the current system.

Why Podman?

Podman is a popular containerization tool that allows you to run and manage containers without requiring a daemon like Docker. It is the recommended container engine on most modern distributions and offers several advantages over Docker.

Firstly, Podman enhances security by supporting rootless containers, allowing containers to run under non-privileged user accounts. Secondly, it integrates seamlessly with SELinux, enabling robust access control and policy enforcement. Thirdly, Podman works natively with systemd, which facilitates container lifecycle management through systemd units and quadlets.

Installing Podman

To be able to run containers using Podman, we first need to install Podman and some additional tools that will help us manage containers with SystemD.

Install podman and needed tools

Red Hat

SUSE

Ubuntu

Configure Podman for user-based container management

Next, we will create a podman-user which will be running the container(s). You

are free to use a different username, e.g. zabbix-proxy for a user that will

be running only zabbix-proxy in a container.

Create and init podman user

When your system has SELinux enabled, execute the following command as root.

SELinux: Set file context mapping

This command adds a SELinux file context mapping by creating an equivalence (-e)

between the default container storage directory /var/lib/containers and the user’s

Podman container storage path /home/podman/.local/share/containers. Essentially,

it tells SELinux to treat files in the user's container storage the same way it

treats files in the default system container storage, ensuring proper access

permissions under SELinux policy.

After defining new SELinux contexts, this command recursively (-R) applies

the correct SELinux security contexts to the files in the specified directory.

The -v flag enables verbose output, showing what changes are made. This ensures

that all files in the container storage directory have the correct SELinux labels

as defined by the previous semanage commands.

This command enables “linger” for the user podman. Linger allows user services

(such as containers managed by SystemD) to continue running even when the user

is not actively logged in. This is useful for running Podman containers in the

background and ensures that containerized proxies or other services remain active

after logout or system reboots.

As the final step in creating the Podman setup we need to to tell SystemD where the user-specific runtime files are stored:

Set XDG_RUNTIME_DIR environment variable for podman user

This line ensures that the XDG_RUNTIME_DIR environment variable is correctly set

for the podman user and is loaded in current and next sessions.

This variable points to the location where user-specific runtime

files are stored, including the systemd user session bus. Setting it is essential

for enabling systemctl --user to function properly with Podman-managed containers.

Your system is now prepared for running Zabbix components as containers using Podman.

Known issue waiting for network-online.target

In case the starting of your containers takes about 90s and then ultimately

fails to start. If you then see lines like this in your system logging

(journalctl):

systemd[1601]: Starting Wait for system level network-online.target as user....

sh[3128]: inactive

sh[3130]: inactive

sh[3132]: inactive

sh[3134]: inactive

sh[3136]: inactive

...

...

sh[3604]: inactive

sh[3606]: inactive

systemd[1601]: podman-user-wait-network-online.service: start operation timed out. Terminating.

systemd[1601]: podman-user-wait-network-online.service: Main process exited, code=killed, status=15/TERM

systemd[1601]: podman-user-wait-network-online.service: Failed with result 'timeout'.

systemd[1601]: Failed to start Wait for system level network-online.target as user..

Then you are hitting a known problem with the Podman Quadlets.

This is caused by the fact that the SystemD generated Quadlet service contains

a dependency to the system-wide special target network-online.target which

normally indicates the system's network is fully up and running. However on

certain Linux distriutions or with specific networking configurations the

system network components may not correctly notify SystemD that the network is

"online", causing network-online.target to never get activated. This in turn

makes that Podman will wait until it times out, thinking the network is not

yet available.

As a workaround, you can create a dummy system service that will trigger

network-online.target:

Known issue waiting for network-online.target

In case the starting of your containers takes about 90s and then ultimately

fail to start. If you then see lines like this in your system logging

(journalctl):

systemd[1601]: Starting Wait for system level network-online.target as user....

sh[3128]: inactive

sh[3130]: inactive

sh[3132]: inactive

sh[3134]: inactive

sh[3136]: inactive

...

...

sh[3604]: inactive

sh[3606]: inactive

systemd[1601]: podman-user-wait-network-online.service: start operation timed out. Terminating.

systemd[1601]: podman-user-wait-network-online.service: Main process exited, code=killed, status=15/TERM

systemd[1601]: podman-user-wait-network-online.service: Failed with result 'timeout'.

systemd[1601]: Failed to start Wait for system level network-online.target as user..

Then you are hitting a known problem with the Podman Quadlets.

This is caused by the fact that the SystemD generated Quadlet service contains

a dependency to the system-wide special target network-online.target which

normally indicates the system's network is fully up and running. However on

certain Linux distriutions or with specific networking configurations the

system network components may not correctly notify SystemD that the network is

"online", causing network-online.target to never get activated. This in turn

makes that Podman will wait until it times out, thinking the network is not

yet available.

As a workaround, you can create a dummy system service that will trigger

network-online.target:

Conclusion

With the preparation of your system for Zabbix now complete, you have successfully configured your environment for the installation of Zabbix components. We've covered the steps to add the official Zabbix repository to your system, preparing it for the installation of Zabbix server, database, and frontend components. And we have also prepared the system for running containers using Podman, if needed.

Your system is now ready for the next steps. In the following chapter, we will delve into the installation of the Zabbix components, guiding you through the process of setting up the Zabbix server, database, and frontend.

Questions

Useful URLs

Chapter 01 : Zabbix components and installation

Zabbix components, basic functions and installation

In this chapter, we expand on the foundational knowledge from the "Getting Started" section to provide a comprehensive guide for both beginners who are installing Zabbix for the first time and advanced users who seek to optimize their setup. We’ll not only cover the essential steps for a basic installation but also delve into the finer details of Zabbix architecture, components, and best practices.

We’ll start by walking through the installation process, ensuring you have a solid foundation to build on. From there, we'll move into the core components of Zabbix, what each one does, how they interact, and why they are crucial to your monitoring solution. You'll learn about subprocesses, their roles, and how they contribute to Zabbix efficiency and reliability.

Additionally, we’ll explore good architectural choices that can make or break your monitoring setup. Whether you're managing a small network or a large-scale infrastructure, making the right design decisions early on will pay dividends in scalability, performance, and maintenance.

This chapter is designed to cater to a wide range of readers. If you're simply looking to get Zabbix up and running, you'll find clear, step-by-step instructions. For those wanting to dive deeper, we'll provide detailed insights into how Zabbix functions under the hood, helping you make informed choices that align with your needs and future growth plans.

By the end of this chapter, you will have not only a working Zabbix installation but also a thorough understanding of its components and architecture, empowering you to leverage Zabbix to its fullest potential, regardless of the complexity of your environment.

Let’s embark on this detailed journey into Zabbix and equip ourselves with the knowledge to both start and optimize a powerful monitoring solution.

Zabbix architecture

In this chapter, we will walk through the process of installing the Zabbix server. There are many different ways to setup a Zabbix server. We will cover the most common setups with MariaDB and PostgreSQL on RHEL- and SLES-based distro's and Ubuntu.

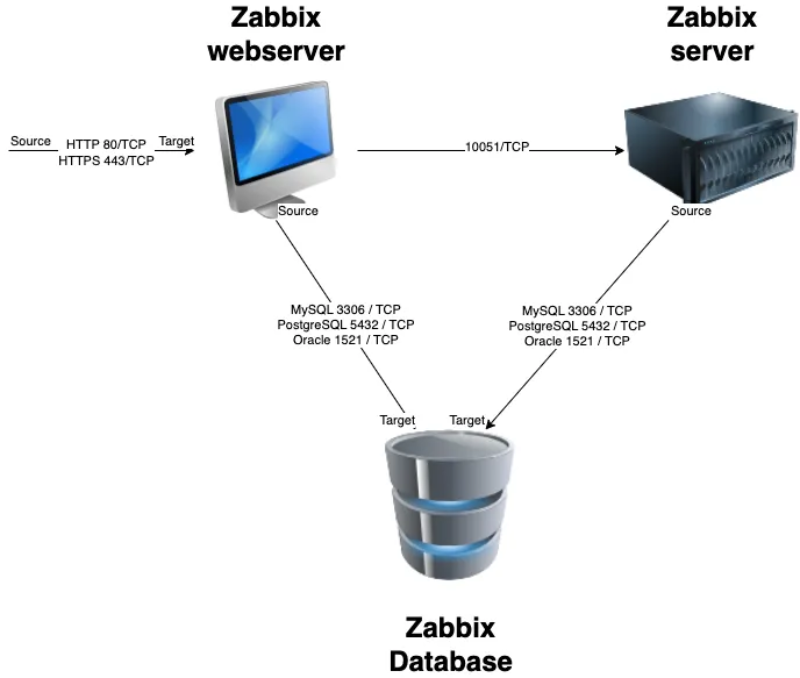

Before beginning the installation, it is important to understand the architecture of Zabbix. The Zabbix server is structured in a modular fashion, composed of three main components, which we will discuss in detail.

- The Zabbix server

- The Zabbix web server

- The Zabbix database

Creation of DB users

In our setup we will create 2 DB users zabbix-web and zabbix-srv. The

zabbix-web user will be used for the frontend to connect to our zabbix database.

The zabbix-srv user will be used by our zabbix server to connect to the database.

This allows us to limit the permissions for every user to only what is strictly

needed.

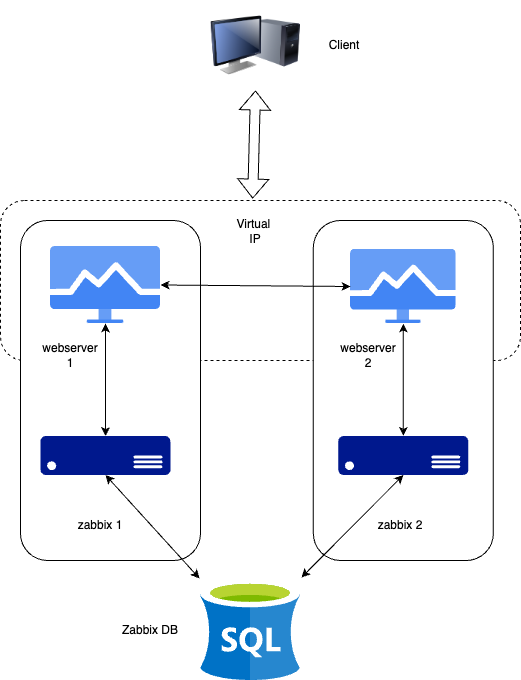

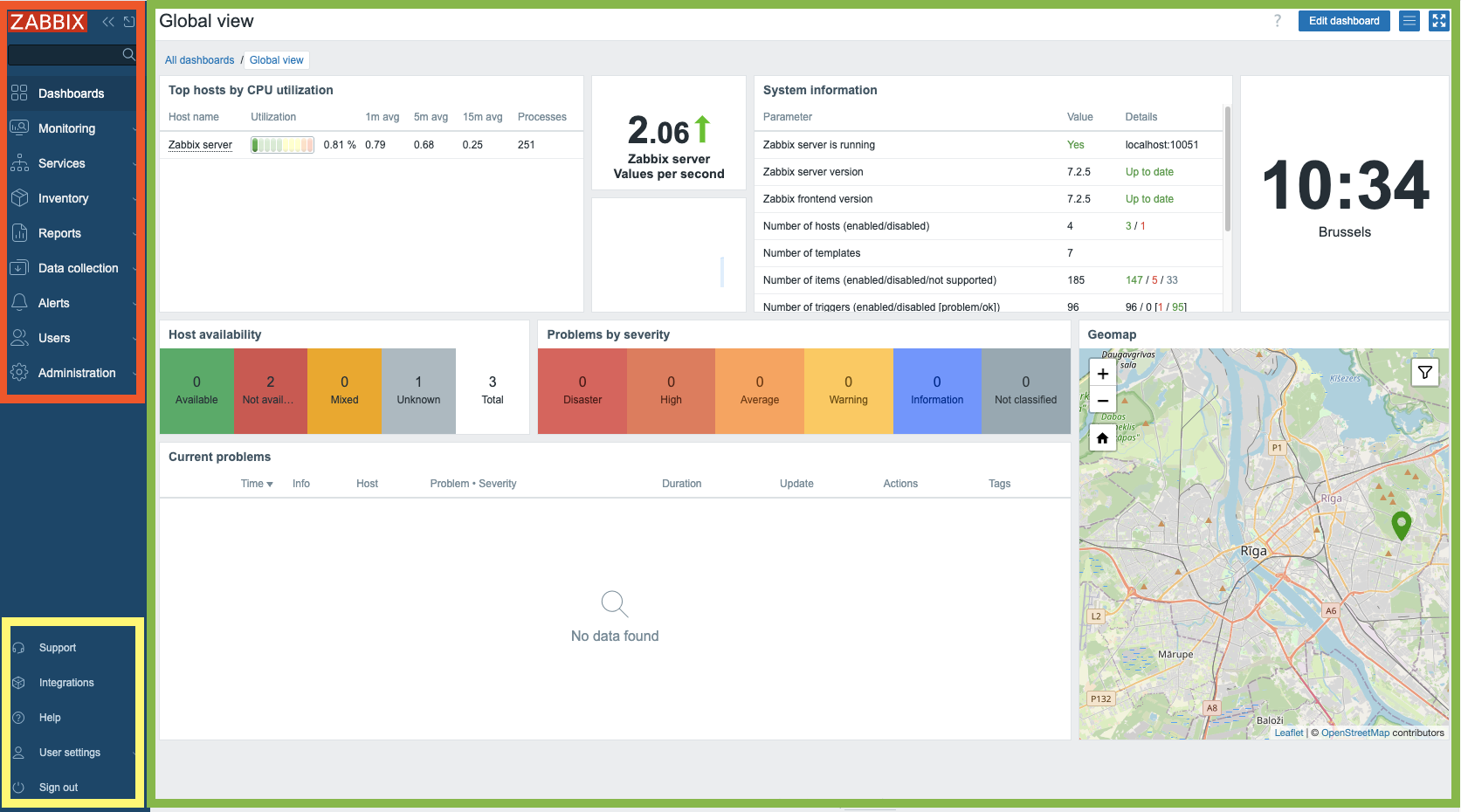

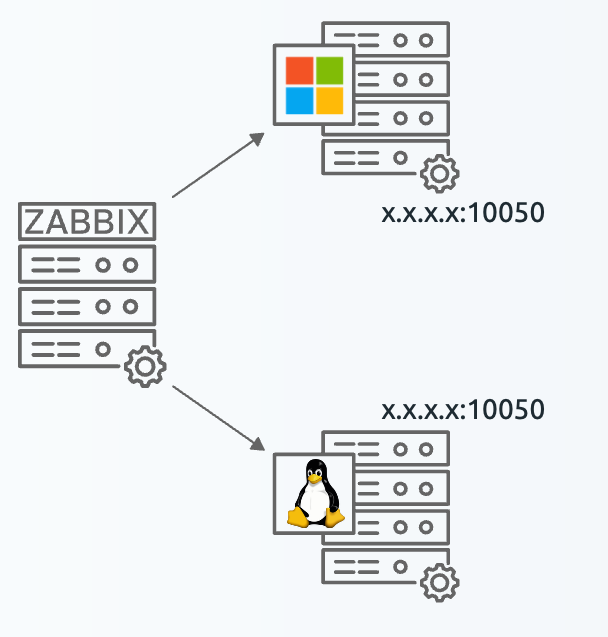

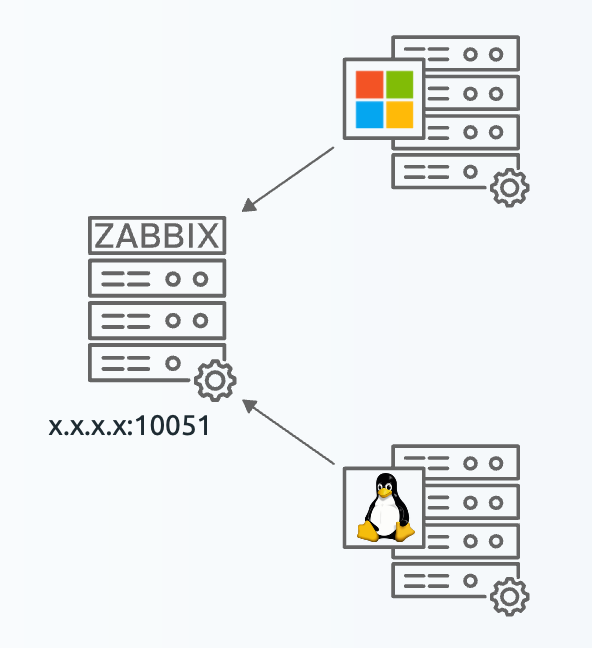

1.1 Zabbix basic split installation

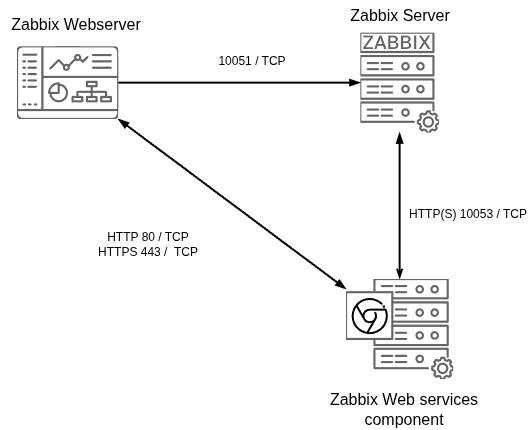

All of these components can either be installed on a single server or distributed across three separate servers. The core of the system is the Zabbix server, often referred to as the "brain." This component is responsible for processing trigger calculations and sending alerts. The database serves as the storage for the Zabbix server's configuration and all the data it collects. The web server provides the user interface (front-end) for interacting with the system. It is important to note that the Zabbix API is part of the front-end component, not the Zabbix server itself.

These components must function together seamlessly, as illustrated in the diagram above. The Zabbix server must read configurations and store monitoring data in the database, while the front-end needs access to read and write configuration data. Furthermore, the front-end must be able to check the status of the Zabbix server and retrieve additional necessary information to ensure smooth operation.

For our setup, we will be using two virtual machines (VMs): one VM will host both the Zabbix server and the Zabbix web front-end, while the second VM will host the Zabbix database.

Note

It is perfectly possible to install all components on one single VM or every component on a separate VM. The reason why we split the DB in our example is because the database will probably be the first component giving you performance headaches. It is also the component that needs some extra attention when we split it from the other components, so for this reason we have chosen in this example to split the database from the rest of the setup.

We will cover the following topics:

- Install our Database based on MariaDB.

- Install our Database based on PostgreSQL.

- Installing the Zabbix server.

- Install the frontend.

Database

Database choices

Choosing a Database Backend for Zabbix

A critical decision when managing Zabbix installations is selecting the database backend. Zabbix supports several database options: MySQL/Percona, MariaDB, PostgreSQL (including TimescaleDB), and Oracle (up to Zabbix 7.0).

Oracle Database deprecation

Zabbix 7.0 marks the final release to offer support for Oracle Database. Consequently, systems running Zabbix 7.0 or any prior version must undertake a database migration to either PostgreSQL, MySQL, or a compatible fork such as MariaDB before upgrading to a later Zabbix release. This migration is a mandatory step to ensure continued functionality and compatibility with future Zabbix versions.

All supported databases perform similarly under typical Zabbix workloads, and Zabbix treats them equally in terms of functionality. As such, the choice primarily depends on your or your team’s familiarity with a particular database system. One notable exception is TimescaleDB, a PostgreSQL extension optimized for time-series data. This makes it especially well-suited for monitoring applications like Zabbix, which handle large volumes of timestamped data.

In large-scale environments with high-frequency data collection, TimescaleDB can deliver significant performance benefits, including improved query speeds and built-in compression to reduce storage requirements. However, these advantages come with added complexity during installation and a few restrictions on historical data retention.

TimescaleDB installation

Given its advanced nature, TimescaleDB is not essential for most Zabbix users. As such, its installation is beyond the scope of this chapter. If you plan to use TimescaleDB, refer to Partitioning PostgreSQL with TimescaleDB for detailed guidance after installing PostgreSQL.

Choosing the Source for Database Installation

In this chapter we will focus on installing MariaDB and PostgreSQL, as they are the most commonly used databases with Zabbix. For MySQL or Percona installations, except for the package installation commands, the steps are very similar to MariaDB.

When installing MariaDB or PostgreSQL you must determine the source from which you will want to install the database server. Two primary options are available:

- Vendor-Provided Packages

-

These are included in the software repositories of most Linux distributions and are maintained by the distribution vendor.

Advantages:

- Simplified installation: Packages are readily available via the distribution’s package manager.

- Vendor support: For enterprise distributions (e.g., RHEL, SLES), active subscriptions include official support.

- Compatibility: Guaranteed integration with other system packages and dependencies.

- Distribution-specific optimizations: Includes tailored configurations (e.g., logrotate, bash completion,...).

- Long-term maintenance: Security and bug fixes are backported by the vendor for the duration of the distribution’s support lifecycle.

Disadvantages:

- Version lock-in: Major distribution upgrades may automatically introduce newer database versions, potentially requiring compatibility checks with Zabbix.

- Vendor modifications: Default configurations, log directories, and data paths may be altered to align with distribution-specific standards.

- Official MariaDB/PostgreSQL Repositories

-

These repositories provide packages directly from MariaDB/PostgreSQL and offer access to the latest stable releases.

Advantages:

- Up-to-date versions: Immediate access to the latest features, security patches, and bug fixes. However, make sure Zabbix is compatible with the chosen version.

- Enterprise support: Option to purchase MariaDB Enterprise or Enterprise DB respectively, which includes professional support and additional features.

Disadvantages:

- Manual version management: Users must proactively monitor and upgrade to new major versions to ensure continued security and bug fix coverage.

Database version compatibility

Whether you plan to use the OS vendor-provided packages or the official database-vendor packages, ensure that the database version is supported by your Zabbix version to avoid potential integration issues. Check the Zabbix documentation for the latest supported versions.

Before installing the database software, ensure that the server meets the configuration requirements and is prepared as outlined in the previous chapter: Getting started.

Conclusion

We have discussed the various database backends supported by Zabbix. We've also examined the advantages and disadvantages of using vendor-provided packages versus official repositories for installing MariaDB and PostgreSQL.

Armed with this knowledge, you are now ready to proceed with the installation of your chosen database backend. In the following chapters, we will guide you through the installation process for MariaDB or PostgreSQL, ensuring that your Zabbix instance is equipped with a robust and efficient database system.

Now that you have a clear understanding of the database options available, let's move on to the installation of your preferred database backend.

Questions

- Should I choose MySQL or PostgreSQL as the database back-end? Why?

- Should I use the packages provided by the OS vendor, or should I install database-vendor official packages? Why?

Useful URLs

Installing a MariaDB Database

In this section we will install the MariaDB server and -client packages. This will provide the necessary components to run and manage MariaDB as your Zabbix database backend.

If you prefer to use PostgreSQL as your database backend, you can skip this section and proceed to the Installing the PostgreSQL Database section.

MySQL/Percona

If you prefer to use MySQL or Percona instead of MariaDB, the installation

and configuration steps are very similar. Generally, you would replace

mariadb with mysql in the package names and commands.

Installing MariaDB Server and Client from OS Vendor-Provided Packages

To install the distribution default MariaDB server and client, execute the following command:

Install distribution version of Mariadb

Red Hat

SUSE

Ubuntu

This command will download and install both the server and client packages, enabling you to set up, configure, and interact with your MariaDB database. Once the installation is complete, you can proceed to the Starting the MariaDB database section.

Installing MariaDB Server and Client from Official MariaDB Repositories

If you prefer to install MariaDB from the official MariaDB repositories instead of the OS vendor-provided packages, the first step is to add the MariaDB repository to your system.

Adding the MariaDB Repository

To create the MariaDB repository file, execute the following command in your terminal:

Define the MariaDB repository

Red Hat

SUSE

Ubuntu

This will open a text editor where you can input the repository configuration details. Once the repository is configured, you can proceed with the installation of MariaDB using your package manager.

The latest config can be found here: https://mariadb.org/download/?t=repo-config

Here's an example configuration for MariaDB 11.4 repositories:

Mariadb repository configuration

Red Hat

# MariaDB 11.4 RedHatEnterpriseLinux repository list - created 2025-02-21 10:15 UTC

# https://mariadb.org/download/

[mariadb]

name = MariaDB

# rpm.mariadb.org is a dynamic mirror if your preferred mirror goes offline. See https://mariadb.org/mirrorbits/ for details.

# baseurl = https://rpm.mariadb.org/11.4/rhel/$releasever/$basearch

baseurl = https://mirror.bouwhuis.network/mariadb/yum/11.4/rhel/$releasever/$basearch

# gpgkey = https://rpm.mariadb.org/RPM-GPG-KEY-MariaDB

gpgkey = https://mirror.bouwhuis.network/mariadb/yum/RPM-GPG-KEY-MariaDB

gpgcheck = 1

SUSE

# MariaDB 11.4 openSUSE repository list - created 2025-12-29 14:34 UTC

# https://mariadb.org/download/

[mariadb]

name = MariaDB

# rpm.mariadb.org is a dynamic mirror if your preferred mirror goes offline. See https://mariadb.org/mirrorbits/ for details.

# baseurl = https://rpm.mariadb.org/11.4/opensuse/$releasever/$basearch

# baseurl = https://rpm.mariadb.org/11.4/opensuse/$releasever/$basearch

baseurl = https://mirror.bouwhuis.network/mariadb/yum/11.4/opensuse/$releasever/$basearch

# gpgkey = https://rpm.mariadb.org/RPM-GPG-KEY-MariaDB

gpgkey = https://mirror.bouwhuis.network/mariadb/yum/RPM-GPG-KEY-MariaDB

gpgcheck = 1

Ubuntu

# MariaDB 11.4 repository list - created 2025-02-21 11:42 UTC

# https://mariadb.org/download/

X-Repolib-Name: MariaDB

Types: deb

# deb.mariadb.org is a dynamic mirror if your preferred mirror goes offline. See https://mariadb.org/mirrorbits/ for details.

# URIs: https://deb.mariadb.org/11.4/ubuntu

URIs: https://mirror.bouwhuis.network/mariadb/repo/11.4/ubuntu

Suites: noble

Components: main main/debug

Signed-By: /etc/apt/keyrings/mariadb-keyring.pgp

After saving the file, ensure that everything is properly set up and that your preferred MariaDB version is compatible with your Zabbix version to avoid potential integration issues.

Installing MariaDB Server and Client

With the MariaDB repository configured, you are now ready to install the MariaDB server and client packages. This will provide the necessary components to run and manage your database.

To install the MariaDB server and client, execute the following command:

Install MariaDB from official repository

Red Hat

SUSE

sudo rpm --import https://mirror.bouwhuis.network/mariadb/yum/RPM-GPG-KEY-MariaDB

sudo zypper install MariaDB-server MariaDB-client

Ubuntu

This command will download and install both the server and client packages, enabling you to set up, configure, and interact with your MariaDB database. Once the installation is complete, you can proceed to the Starting the MariaDB database section.

Starting the MariaDB Database

Now that MariaDB is installed, we need to enable the service to start automatically upon boot and start it immediately. Use the following command to accomplish this:

This command will both enable and start the MariaDB service and since this will be the first time the service is started, it will initialize the database directory. With the MariaDB service now up and running, you can verify that the installation was successful by checking the version of MariaDB using the following command:

The expected output should resemble this:

MariaDB version example

To ensure that the MariaDB service is running properly, you can check its status with the following command:

You should see an output similar to this, indicating that the MariaDB service is active and running:

Mariadb service status example

localhost:~ $ sudo systemctl status mariadb

● mariadb.service - MariaDB database server

Loaded: loaded (/usr/lib/systemd/system/mariadb.service; enabled; preset: disabled)

Active: active (running) since Wed 2025-12-03 00:16:04 CET; 5s ago

Docs: man:mysqld(8)

https://mariadb.com/kb/en/library/systemd/

Process: 11148 ExecStartPre=/usr/lib/mysql/mysql-systemd-helper install (code=exited, status=0/SUCCESS)

Process: 11155 ExecStartPre=/usr/lib/mysql/mysql-systemd-helper upgrade (code=exited, status=0/SUCCESS)

Main PID: 11162 (mysqld)

Status: "Taking your SQL requests now..."

Tasks: 18 (limit: 4670)

CPU: 340ms

CGroup: /system.slice/mariadb.service

└─11162 /usr/sbin/mysqld --defaults-file=/etc/my.cnf --user=mysql --socket=/run/mysql/mysql.sock

Dec 03 00:16:04 localhost.localdomain systemd[1]: [Note] Plugin 'FEEDBACK' is disabled.

Dec 03 00:16:04 localhost.localdomain systemd[1]: [Note] InnoDB: Loading buffer pool(s) from /var/lib/mysql/ib_buffer_pool

Dec 03 00:16:04 localhost.localdomain systemd[1]: [Note] Server socket created on IP: '127.0.0.1', port: '3306'.

Dec 03 00:16:04 localhost.localdomain systemd[1]: [Note] /usr/sbin/mysqld: ready for connections.

Dec 03 00:16:04 localhost.localdomain systemd[1]: Version: '10.11.14-MariaDB' socket: '/run/mysql/mysql.sock' port: 3306 MariaDB package

Dec 03 00:16:04 localhost.localdomain systemd[1]: [Note] InnoDB: Buffer pool(s) load completed at 251203 0:16:04

Dec 03 00:16:04 localhost.localdomain systemd[1]: Started MariaDB database server.

This confirms that your MariaDB server is up and running, ready for further configuration.

Securing the MariaDB Database

To enhance the security of your MariaDB server, it's essential to remove unnecessary test databases, anonymous users, and set a root password. This can be done using the mariadb-secure-installation script, which provides a step-by-step guide to securing your database.

Run the following command:

The mariadb-secure-installation script will guide you through several key steps:

- Set a root password if one isn't already set.

- Remove anonymous users.

- Disallow remote root logins.

- Remove the test database.

- Reload the privilege tables to ensure the changes take effect.

Once complete, your MariaDB instance will be significantly more secure.

mariadb-secure-installation example output

localhost:~ $ sudo mariadb-secure-installation

NOTE: RUNNING ALL PARTS OF THIS SCRIPT IS RECOMMENDED FOR ALL MariaDB

SERVERS IN PRODUCTION USE! PLEASE READ EACH STEP CAREFULLY!

In order to log into MariaDB to secure it, we'll need the current

password for the root user. If you've just installed MariaDB, and

haven't set the root password yet, you should just press enter here.

Enter current password for root (enter for none):

OK, successfully used password, moving on...

Setting the root password or using the unix_socket ensures that nobody

can log into the MariaDB root user without the proper authorisation.

You already have your root account protected, so you can safely answer 'n'.

Switch to unix_socket authentication [Y/n] n

... skipping.

You already have your root account protected, so you can safely answer 'n'.

Change the root password? [Y/n] y

New password:

Re-enter new password:

Password updated successfully!

Reloading privilege tables..

... Success!

By default, a MariaDB installation has an anonymous user, allowing anyone

to log into MariaDB without having to have a user account created for

them. This is intended only for testing, and to make the installation

go a bit smoother. You should remove them before moving into a

production environment.

Remove anonymous users? [Y/n] y

... Success!

Normally, root should only be allowed to connect from 'localhost'. This

ensures that someone cannot guess at the root password from the network.

Disallow root login remotely? [Y/n] y

... Success!

By default, MariaDB comes with a database named 'test' that anyone can

access. This is also intended only for testing, and should be removed

before moving into a production environment.

Remove test database and access to it? [Y/n] y

- Dropping test database...

... Success!

- Removing privileges on test database...

... Success!

Reloading the privilege tables will ensure that all changes made so far

will take effect immediately.

Reload privilege tables now? [Y/n] y

... Success!

Cleaning up...

All done! If you've completed all of the above steps, your MariaDB

installation should now be secure.

Thanks for using MariaDB!

You are now ready to configure the database for Zabbix.

Creating the Zabbix database instance

With MariaDB now set up and secured, we can move on to creating the database for Zabbix. This database will store all the necessary data related to your Zabbix server, including configuration information and monitoring data.

Follow these steps to create the Zabbix database:

Log in to the MariaDB shell as the root user: You'll be prompted to enter the root password that you set during the mariadb-secure-installation process.

Once you're logged into the MariaDB shell, run the following command to create a database for Zabbix:

Create the database

What is utf8mb4

utf8mb4 is a proper implementation of UTF-8 in MySQL/MariaDB, supporting all Unicode characters, including emojis. The older utf8 charset in MySQL/MariaDB only supports up to three bytes per character and is not a true UTF-8 implementation, which is why utf8mb4 is recommended.

This command creates a new database named zabbix with the UTF-8 character set,

which is required for Zabbix.

Create a dedicated user for Zabbix and grant the necessary privileges: Next, you

need to create a user that Zabbix will use to access the database. Replace <password>

with a strong password of your choice.

Create users and grant privileges

MariaDB [(none)]> CREATE USER 'zabbix-web'@'<zabbix frontend ip>' IDENTIFIED BY '<password>';

MariaDB [(none)]> CREATE USER 'zabbix-srv'@'<zabbix server ip>' IDENTIFIED BY '<password>';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON zabbix.* TO 'zabbix-srv'@'<zabbix server ip>';

MariaDB [(none)]> GRANT SELECT, UPDATE, DELETE, INSERT ON zabbix.* TO 'zabbix-web'@'<zabbix server ip>';

MariaDB [(none)]> FLUSH PRIVILEGES;

- Replace

<zabbix server ip>with the actual IP address of your server where the Zabbix server will be installed. - Replace

<zabbix frontend ip>with the actual IP address of your server where the Zabbix frontend will be installed.

If both components are installed on the same server, use the same IP address.

Tip

If your Zabbix server, frontend and database are on the same machine, you can replace

<zabbix server ip> and <zabbix frontend ip> with localhost or 127.0.0.1.

This creates new users zabbix-web and zabbix-srv, grants them access to the

Zabbix database, and ensures that the privileges are applied immediately.

At this point, your Zabbix database is ready, but before it can actually be used by Zabbix, we still need to populate the database with the necessary tables and initial data, but that will be covered in the next section when we install the Zabbix server.

If you intent to install Zabbix server on a different machine than the one hosting the database you will need to open the host firewall to allow incoming connections to the database server. By default, MariaDB listens on port 3306.

Add firewall rules

Red Hat / SUSE

Ubuntu

Populate the Zabbix database

During the installation of the database software earlier, we created the necessary users and database for Zabbix, however, Zabbix expects certain tables, schemas, images, and other elements to be present in the database. To set up the database correctly, we need to populate it with the required schema.

First we need to install the Zabbix SQL scripts that contain the required import scripts for the database.

Install SQL scripts

Red Hat

SUSE

Ubuntu

Warning

When using a recent version of MySQL or MariaDB as the database backend for

Zabbix, you may encounter issues related to the creation of triggers during

the schema import process. This is particularly relevant if binary logging

is enabled on your database server. (Binary logging is often enabled by default)

To address this, you need to set the log_bin_trust_function_creators option to 1

in the MySQL/MariaDB configuration file or temporarily at runtime.

This allows non-root users to create stored functions and triggers without requiring

SUPER privileges, which are restricted when binary logging is enabled.

Normally we won't need the setting after the initial import of the Zabbix schema is done, so we will disable it again after the import is complete.

Now lets upload the data from zabbix (db structure, images, user, ... )

for this we make use of the user zabbix-srv and we upload it all in our DB zabbix.

Populate the database

Warning

Depending on the speed of your hardware or virtual machine, the process may take anywhere from a few seconds to several minutes without any visual feedback after entering the root password.

Please be patient and avoid cancelling the operation; just wait for the linux prompt to reappear.

Note

Zabbix seems to like to change the locations of the script to populate the DB every version or even in between versions. If you encounter an error take a look at the Zabbix documentation, there is a good chance that some location was changed.

Once the import of the Zabbix schema is complete, you should no longer need the

log_bin_trust_function_creators global parameter. It is a good practice to remove

it for security reasons.

To revert the global parameter back to 0, use the following command in the MySQL/MariaDB shell:

Disable function log_bin_trust again

This command will disable the setting, ensuring that the servers security posture remains robust.

This concludes our installation of the MariaDB. You can now proceed to Preparing the Zabbix server.

Conclusion

With the successful installation and configuration of MariaDB as the database backend for Zabbix, you now have a robust foundation for your monitoring solution. We've covered the installation of MariaDB from both vendor-provided packages and official repositories, securing the database, creating the necessary Zabbix database and users, and populating the database with the required schema and initial data.

Your Zabbix environment is now ready for the next stages of setup and configuration.

Questions

- What version of MariaDB should I install for compatibility and stability?

- What port does my DB use ?

- Which database users did I create and why?

Useful URLs

Installing a PostgreSQL database

Alternatively to MariaDB/MySQL, you can choose to use PostgreSQL as the database backend for Zabbix. Similar to MariaDB, PostgreSQL can be installed using either the OS vendor-provided packages or the official PostgreSQL repositories.

If you already have installed MariaDB in the previous section, you can skip this section.

As of writing PostgreSQL 13-18 are supported by Zabbix. Check the Zabbix documentation for an up-to-date list of supported versions for your Zabbix version. Usually it's a good idea to go with the latest version that is supported by Zabbix.

TimescaleDB extension

Zabbix also supports the extension TimescaleDB but due to its advanced nature, we won't cover it in this chapter. Refer to Partitioning PostgreSQL with TimescaleDB for detailed instructions on that topic.

Do note that if you want to use TimescaleDB RPM packages provided by Timescale, you will need to install PostgreSQL from the official PostgreSQL repositories instead of the OS vendor-provided packages. If you choose to install PostgreSQL from the OS vendor-provided packages, you will need to compile and install the TimescaleDB extension from source.

Installing PostgreSQL Server and Client from OS Vendor-Provided Packages

To install the distribution default PostgreSQL server, execute the following commands:

Install the Postgres server

Red Hat

SUSE

Ubuntu

This command will download and install both the server and client packages, enabling you to set up, configure, and interact with your PostgreSQL database.

Database initialization required on Red Hat

Due to policies for Red Hat family distributions, the PostgreSQL service does not initialize an empty database required for PostgreSQL to function. So for Red Hat we need to initialize an empty database before continuing:

Red Hat

On SUSE and Ubuntu the OS provided SystemD service will automatically initialize an empty database on first startup.

Once the installation is complete, you can proceed to the Starting the PostgreSQL Database section.

Installing PostgreSQL from Official PostgreSQL Repositories

If you prefer to install PostgreSQL from the official PostgreSQL repositories instead of the OS vendor-provided packages, the first step is to add the PostgreSQL repository to your system.

Adding the PostgreSQL Repository

Set up the PostgreSQL repository with the following commands:

Check https://www.postgresql.org/download/linux/ for more information.

Add PostgreSQL repo

Red Hat

# Install the repository RPM:

dnf install https://download.postgresql.org/pub/repos/yum/reporpms/EL-9-x86_64/pgdg-redhat-repo-latest.noarch.rpm

# Disable the built-in PostgreSQL module:

dnf -qy module disable postgresql

SUSE

# Import the repository signing key:

rpm --import https://zypp.postgresql.org/keys/PGDG-RPM-GPG-KEY-SLES16

# Install the repository RPM:

zypper install https://download.postgresql.org/pub/repos/zypp/reporpms/SLES-16-x86_64/pgdg-suse-repo-latest.noarch.rpm

# Update the package lists:

zypper refresh

openSUSE Leap

Since the official PostgreSQL packages are specifically built for use on SUSE Linux Enterprise Server (SLES), you will get an error trying to install the repository on openSUSE Leap. We can however safely ignore this problem by choosing to "break the package by ignoring some of its dependencies" as long as you match the SLES version with your openSUSE version:

Problem: 1: nothing provides 'sles-release' needed by the to be installed pgdg-suse-repo-42.0-48PGDG.noarch

Solution 1: do not install pgdg-suse-repo-42.0-48PGDG.noarch

Solution 2: break pgdg-suse-repo-42.0-48PGDG.noarch by ignoring some of its dependencies

Choose from above solutions by number or cancel [1/2/c/d/?] (c): 2

Suse Linux Enterprise Server

On SUSE Linux Enterprise Server (SLES), ensure you are subscribed to the "SUSE Package Hub extension" repository to access necessary dependency packages required for the Official PostgreSQL installation. On SLES 15 you will also need the "Desktop Applications Module":

Ubuntu

# Import the repository signing key:

sudo apt install curl ca-certificates

sudo install -d /usr/share/postgresql-common/pgdg

sudo curl -o /usr/share/postgresql-common/pgdg/apt.postgresql.org.asc --fail https://www.postgresql.org/media/keys/ACCC4CF8.asc

# Create the repository configuration file:

sudo sh -c 'echo "deb [signed-by=/usr/share/postgresql-common/pgdg/apt.postgresql.org.asc] https://apt.postgresql.org/pub/repos/apt $(lsb_release -cs)-pgdg main" > /etc/apt/sources.list.d/pgdg.list'

# Update the package lists:

sudo apt update

Installing the PostgreSQL Server and Client

With the PostgreSQL repositories configured, you are now ready to install the PostgreSQL server and client packages. This will provide the necessary components to run and manage your database.

Install PostgreSQL from official repositories

Red Hat

SUSE

Ubuntu

This command will download and install both the server and client packages, enabling you to set up, configure, and interact with your PostgreSQL database.

Next, before we can start the PostgreSQL server we need to initialize a new empty database:

Once the installation is complete, you can proceed to the Starting the PostgreSQL Database section.

Starting the PostgreSQL Database

Now that PostgreSQL is installed, we need to enable the service to start automatically upon boot as well as start it immediately. Use the following command to accomplish this:

Enable and start PostgreSQL service

for OS-provided packages

for official PostgreSQL packages:

This command will both enable and start the PostgreSQL service. With the service now up and running, you can verify that the installation was successful by checking the version of PostgreSQL using the following command:

The expected output should resemble this:

To ensure that the PostgreSQL service is running properly, you can check its status with the following command:

Get PostgreSQL status

for OS-provided packages

for official PostgreSQL packages:

You should see an output similar to this, indicating that the PostgreSQL service is active and running:

PostgreSQL service status example

localhost:~ $ sudo systemctl status postgresql-17

● postgresql-17.service - PostgreSQL 17 database server

Loaded: loaded (/usr/lib/systemd/system/postgresql-17.service; enabled; preset: disabled)

Active: active (running) since Mon 2025-12-29 17:24:07 CET; 6s ago

Invocation: 43ba47dfee5b415db223e3452c3cfacc

Docs: https://www.postgresql.org/docs/17/static/

Process: 11131 ExecStartPre=/usr/pgsql-17/bin/postgresql-17-check-db-dir ${PGDATA} (code=exited, status=0/SUCCESS)

Main PID: 11137 (postgres)

Tasks: 7 (limit: 4672)

CPU: 471ms

CGroup: /system.slice/postgresql-17.service

├─11137 /usr/pgsql-17/bin/postgres -D /var/lib/pgsql/17/data/

├─11138 "postgres: logger "

├─11139 "postgres: checkpointer "

├─11140 "postgres: background writer "

├─11142 "postgres: walwriter "

├─11143 "postgres: autovacuum launcher "

└─11144 "postgres: logical replication launcher "

Dec 29 17:24:07 localhost.localdomain systemd[1]: Starting PostgreSQL 17 database server...

Dec 29 17:24:07 localhost.localdomain postgres[11137]: 2025-12-29 17:24:07.650 CET [11137] LOG: redirecting log output to logging co>

Dec 29 17:24:07 localhost.localdomain postgres[11137]: 2025-12-29 17:24:07.650 CET [11137] HINT: Future log output will appear in di>

Dec 29 17:24:07 localhost.localdomain systemd[1]: Started PostgreSQL 17 database server.

This confirms that your PostgreSQL server is up and running, ready for further configuration.

Securing the PostgreSQL database

PostgreSQL handles access permissions differently from MySQL and MariaDB.

PostgreSQL relies on a file called pg_hba.conf to manage who can access the database,

from where, and what encryption method is allowed for authentication.

About pg_hba.conf

Client authentication in PostgreSQL is configured through the pg_hba.conf

file, where "HBA" stands for Host-Based Authentication. This file specifies

which users can access the database, from which hosts, and how they are authenticated.

For further details, you can refer to the official PostgreSQL documentation."

https://www.postgresql.org/docs/current/auth-pg-hba-conf.html

Add the following lines, the order here is important.

Edit the pg_hba file

Red Hat / SUSE

# for OS-provided packages

vi /var/lib/pgsql/data/pg_hba.conf

# for official packages

vi /var/lib/pgsql/17/data/pg_hba.conf

Ubuntu

Location of pg_hba file

If you don't find the pg_hba.conf and postgres.conf files in the above

mentioned location you can ask PostgreSQL itself for the location using

this command (provided that PostgreSQL is currently running):

The resulting pg_hba file should look like :

Pg_hba example

# "local" is for Unix domain socket connections only

local zabbix zabbix-srv scram-sha-256

local all all peer

# IPv4 local connections

host zabbix zabbix-srv <ip from zabbix server/24> scram-sha-256

host zabbix zabbix-web <ip from zabbix server/24> scram-sha-256

host all all 127.0.0.1/32 scram-sha-256

# IPv6 local connections:

host zabbix zabbix-srv ::1/128 scram-sha-256

host zabbix zabbix-web ::1/128 scram-sha-256

host all all ::1/128 ident

Ensure to keep the order of the entries

The order of the entries in the pg_hba.conf file is crucial, as PostgreSQL

processes these rules sequentially. Ensure that the specific rules for the

zabbix-srv and zabbix-web users are placed before any broader rules like

the default all user rules that could potentially override them.

After we changed the pg_hba.conf file don't forget to restart postgres otherwise the settings

will not be applied. But before we restart, let us also edit the file postgresql.conf

and allow our database to listen on our network interface for incoming connections

from the Zabbix server. PostgreSQL will by default only allow connections from a unix socket.

Edit postgresql.conf file

Red Hat / SUSE

# for OS-provided packages

vi /var/lib/pgsql/data/postgresql.conf

# for official packages

vi /var/lib/pgsql/17/data/postgresql.conf

Ubuntu

Locate the following line:

and replace it with:

Note

This will enable PostgreSQL to accept connections from any network interface, not just the local machine. In production it's probably a good idea to limit who can connect to the DB.

After making this change, restart the PostgreSQL service to apply the new settings:

Restart the DB server

for OS-provided packages

for official packages

Tip

If the service fails to restart, review the pg_hba.conf file for any syntax errors,

as incorrect entries here may prevent PostgreSQL from starting.

Creating the Zabbix database instance

With the necessary packages installed, you are now ready to create the Zabbix database and users for both the server and frontend.

The PostgreSQL packages automatically create a default postgres linux-user

during installation which has administrative privileges on the PostgreSQL instance.

To administer the database, you will need to execute commands as the postgres user.

First, create the Zabbix server database user (also referred to as a "role" in PostgreSQL):

Create server users

Next, create the Zabbix frontend user, which will be used to connect to the database:

Create front-end user

Now with the users created, the next step is to create the Zabbix database.

Execute the following command to create the database zabbix with the owner set to

zabbix-srv and the character encoding set to Unicode as required by Zabbix:

What is this 'template0'?

In PostgreSQL, template0 is a default database template that serves as a pristine

copy of the database system. When creating a new database using template0,

it ensures that the new database starts with a clean slate, without any

pre-existing objects or configurations that might be present in other templates.

This is particularly useful when you want to create a database with specific

settings or extensions without inheriting any unwanted elements from other templates.

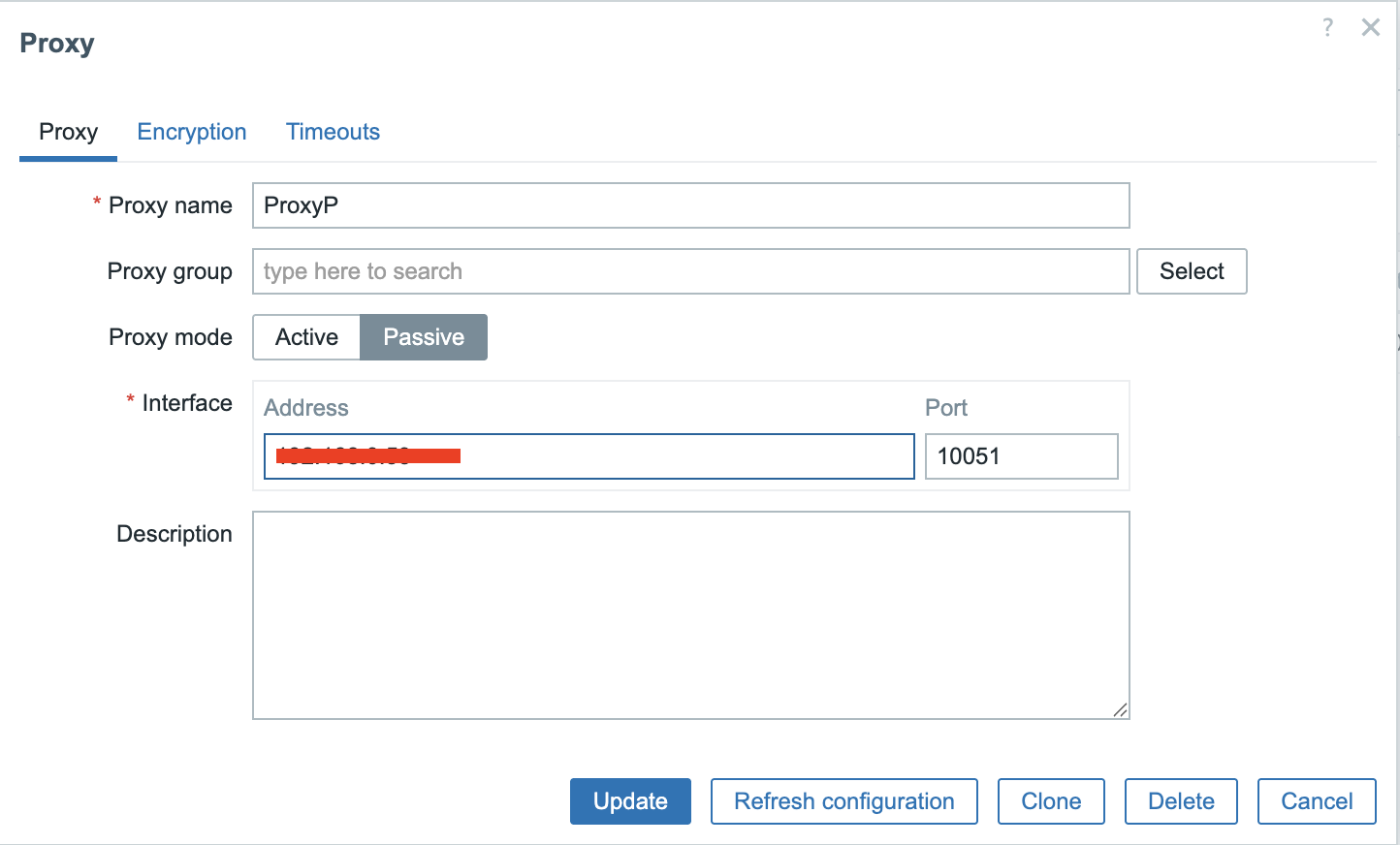

Once the database is created, you should verify the connection and ensure that